Accurate ego-motion estimation is a critical component of any autonomous system. Conventional ego-motion sensors, such as cameras and LiDARs, may be compromised in adverse environmental conditions, such as fog, heavy rain, or dust. Automotive radars, known for their robustness to such conditions, are therefore a complementary sensor or a promising alternative within ego-motion estimation frameworks. In this paper, we propose a novel Radar-Inertial Odometry (RIO) system that integrates an automotive radar and an inertial measurement unit (IMU). The key contribution is the integration of online temporal delay calibration within the factor graph optimization framework, which compensates for potential time offsets between radar and IMU measurements. To validate the proposed approach, we conducted a thorough experimental analysis on real-world radar and IMU data. The results show that, even without scan matching or target tracking, integrating online temporal calibration significantly reduces localization error compared to systems that disregard time synchronization. This highlights the important, yet often neglected, role of accurate temporal alignment in radar-based sensor fusion systems for autonomous navigation.

An illustration of a radar-inertial odometry system with imperfect radar-IMU data arrival. We estimate the temporal offset between radar measurements and IMU data and optimize it within the factor graph framework.

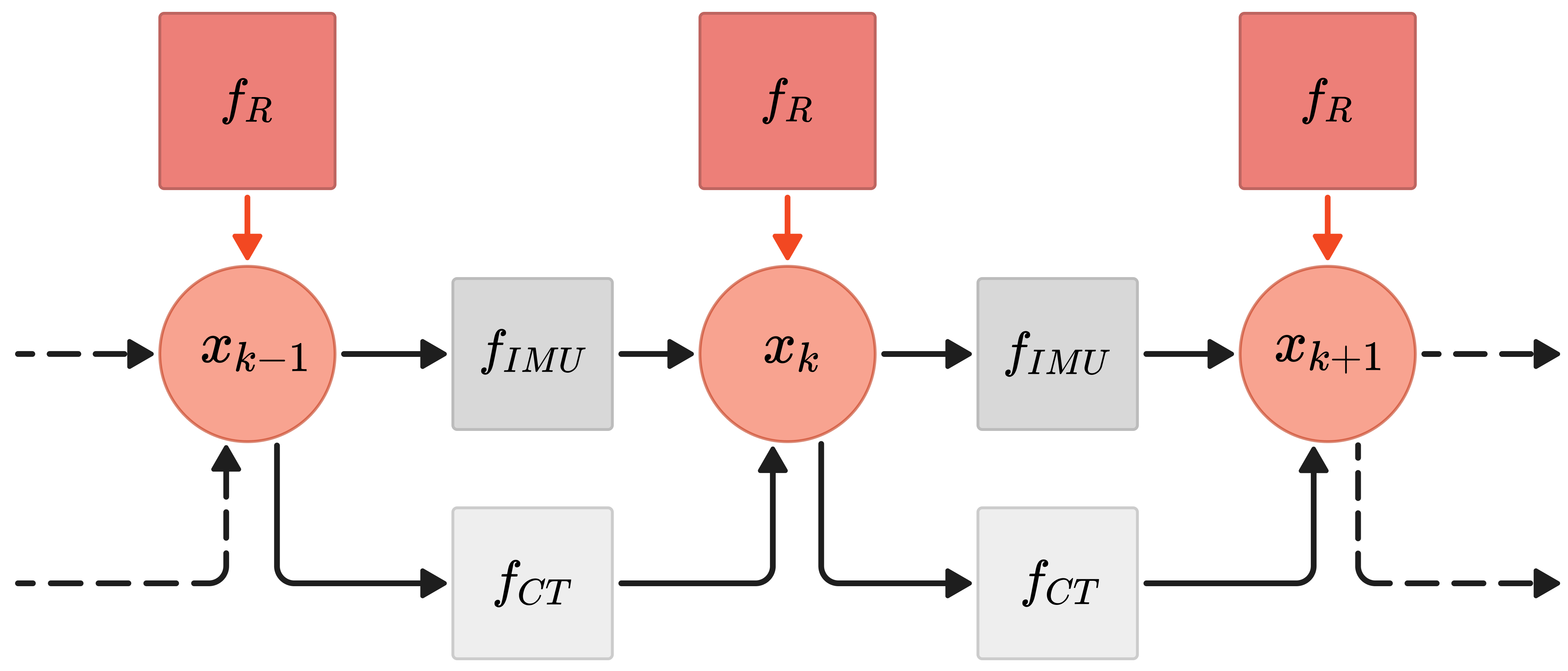

Proposed factor graph structure. The factor graph consists of the IMU factor (fIMU), the radar ego-velocity factor (fR), and the constant time offset factor (fCT).

The proposed method, dubbed RIO-T (Radar-Inertial Odometry with Temporal Offset Calibration), estimates odometry using a loosely coupled radar-inertial sensor fusion approach implemented within a factor graph optimization framework. The method imposes no assumptions on the environment, such as the presence of planar surfaces (e.g., for ground plane estimation). The core idea is to adjust the radar ego-velocity factor by compensating for the velocity difference accumulated over the time offset. This difference is computed from the most recent IMU acceleration, with the bias removed and the measurement corrected for gravity, scaled by the time-offset state. Assuming constant acceleration around the most recent acceleration measurement yields a robust and accurate real-time ego-motion estimate while simultaneously estimating the time lag between radar and IMU measurements.
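The compensation described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the function names, the gravity vector, and the simplification that the radar frame coincides with the IMU body frame (no lever arm or extrinsic rotation) are all hypothetical choices made for the example.

```python
import numpy as np

# Assumed world-frame gravity vector (z axis pointing up); not taken from the paper.
GRAVITY_W = np.array([0.0, 0.0, -9.81])


def compensated_velocity(v_w, R_wb, acc_meas, acc_bias, t_d):
    """Propagate the world-frame velocity over the time offset t_d (seconds).

    Uses the constant-acceleration assumption: the latest accelerometer
    reading has its bias removed, is rotated into the world frame, and is
    corrected for gravity before being scaled by the time offset.
    """
    a_w = R_wb @ (acc_meas - acc_bias) + GRAVITY_W
    return v_w + a_w * t_d


def radar_velocity_residual(v_meas, v_w, R_wb, acc_meas, acc_bias, t_d):
    """Residual between the predicted and the measured radar ego-velocity.

    Hypothetical simplification: the radar frame is taken to be identical to
    the body frame, so the prediction is the compensated velocity rotated
    into the body frame.
    """
    v_w_comp = compensated_velocity(v_w, R_wb, acc_meas, acc_bias, t_d)
    return R_wb.T @ v_w_comp - v_meas
```

For example, with a level platform (`R_wb` equal to the identity), a world-frame velocity of 2.0 m/s along x, a gravity-corrected acceleration of 1.0 m/s² along x, and `t_d = 0.1` s, the predicted radar velocity is 2.1 m/s along x, so a radar measurement of 2.1 m/s gives a residual close to zero. In an optimizer, `t_d` would be one of the estimated states rather than a fixed input.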

Convergence of the temporal offset in the Gym sequence from the IRS dataset using the proposed method. The radar measurements were artificially shifted forward in time in steps of 2.5 ms to check whether the proposed method converges correctly to the expected offset values.

Convergence of the temporal offset in the Mocap difficult sequence from the IRS dataset using the proposed method. The radar measurements were artificially shifted by 112.15 ms to simulate a radar time delay of 100 ms, since the temporal offset estimated on the original data was -12.15 ms. We ran our method for three iterations to evaluate its ability to converge even with large temporal offsets.
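The bookkeeping behind these two convergence experiments can be illustrated with a short Python sketch. The helper names and the sign convention (an added shift increases the offset the estimator should recover) are assumptions made for the example, not details confirmed by the source.

```python
def shift_stamps(stamps_s, shift_ms):
    """Return radar timestamps (in seconds) shifted by shift_ms milliseconds."""
    return [t + shift_ms * 1e-3 for t in stamps_s]


def expected_offset_ms(native_offset_ms, shift_ms):
    """Offset the estimator should converge to after an artificial shift.

    Assumed convention: the artificial shift adds to the offset that is
    already present in the unmodified data.
    """
    return native_offset_ms + shift_ms
```

Under this convention, `expected_offset_ms(-12.15, 112.15)` evaluates to approximately 100 ms, matching the simulated radar delay in the Mocap difficult experiment. The stepwise experiment on the Gym sequence corresponds to calling `shift_stamps` repeatedly with `shift_ms` increased in steps of 2.5.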

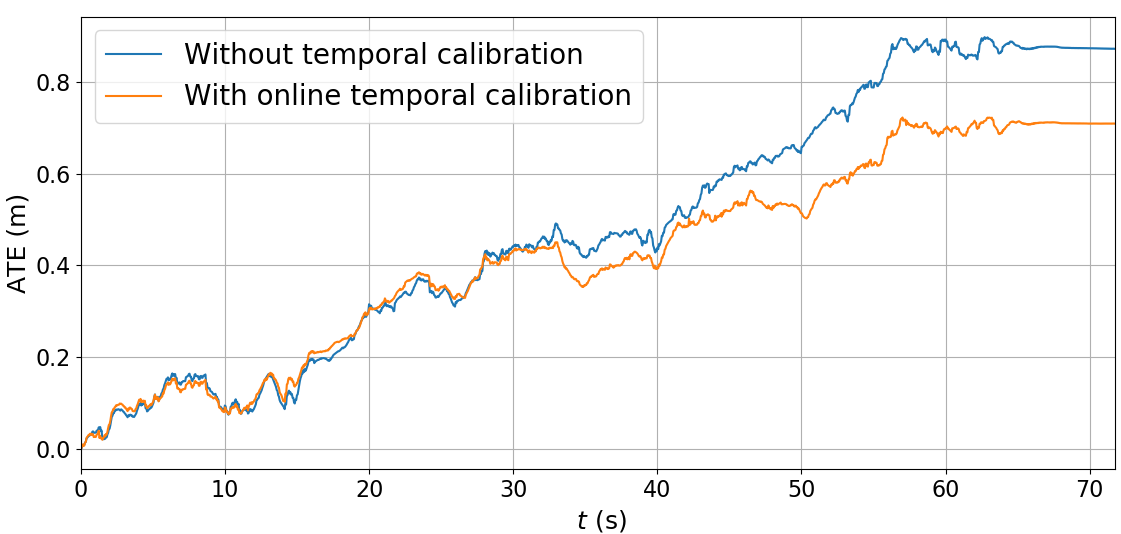

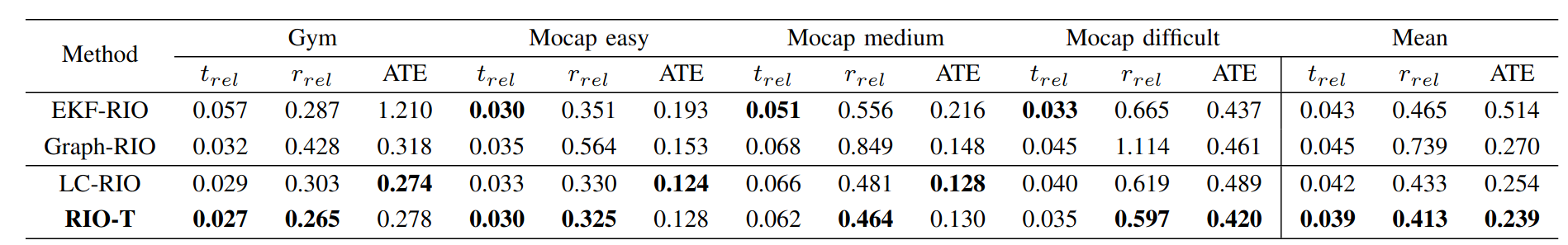

The results show the advantage of integrating the temporal offset into the radar measurements and the state: the method achieves an accuracy that is competitive with other modern radar-inertial odometry algorithms and in some cases surpasses them. The effect of estimating the temporal offset is particularly evident in the most dynamic sequence, Mocap difficult, where omitting the offset leads to the largest discrepancies and estimating it reduces the absolute trajectory error (ATE) by 14.11%.

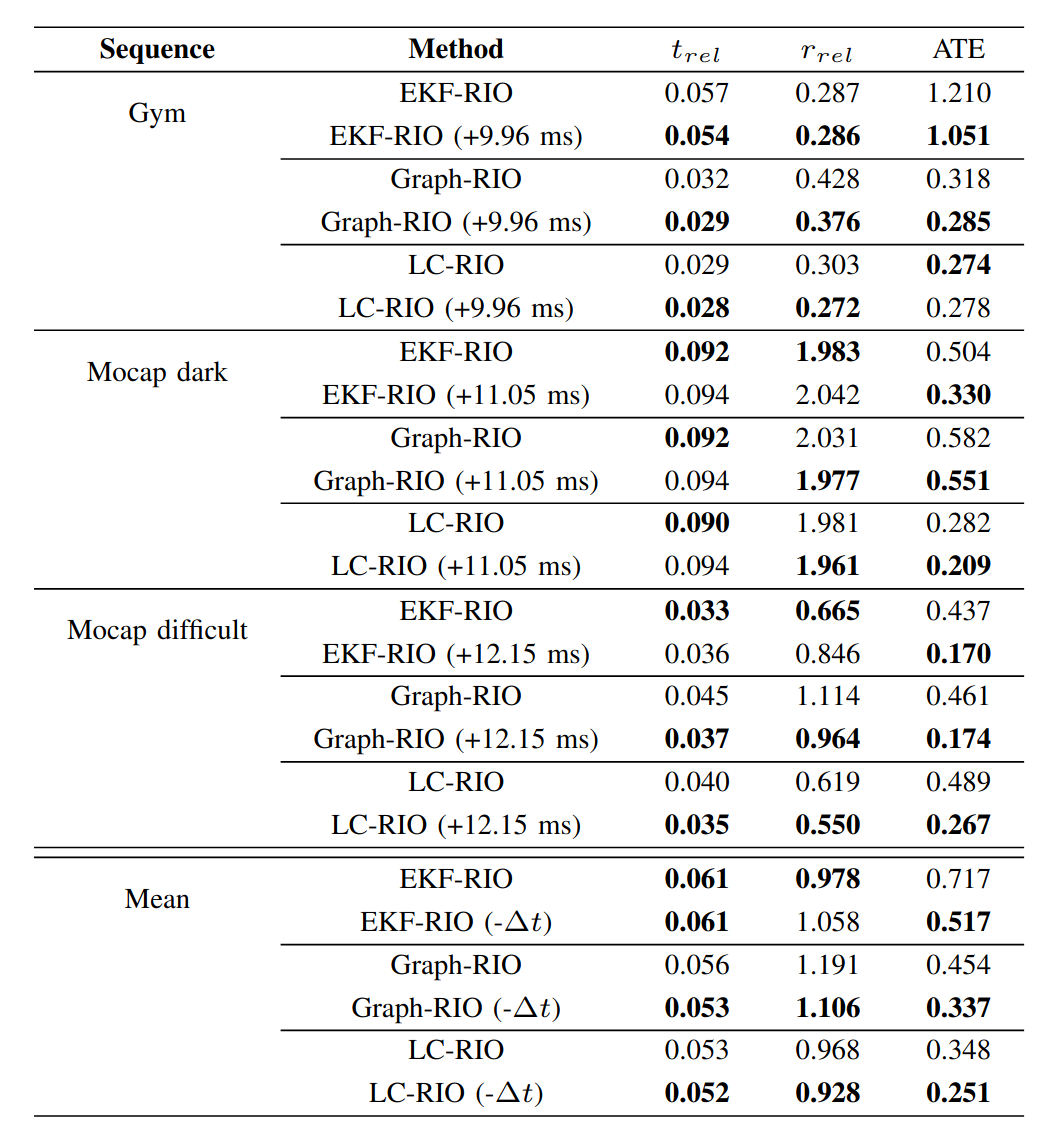

Accounting for and correcting the temporal offset between radar and IMU significantly improves the accuracy of state-of-the-art radar-inertial odometry methods. The time offsets were estimated using our proposed method.

Visualization of the results on the Mocap dark sequence.

@article{vstironja2025impact,
  title={Impact of Temporal Delay on Radar-Inertial Odometry},
  author={\v{S}tironja, Vlaho-Josip and Petrovi{\'c}, Luka and Per{\v{s}}i{\'c}, Juraj and Markovi{\'c}, Ivan and Petrovi{\'c}, Ivan},
  journal={arXiv preprint arXiv:2503.02509},
  year={2025}
}

This research has been supported by the European Regional Development Fund under the grant PK.1.1.02.0008 (DATACROSS).